Generate an SSH Key on a Cluster

Install Prerequisites

Gypsum-gateway logins are restricted to SSH keys. See the instructions below for how to generate a key pair for your operating system. NOTE: The instructions below describe how to generate a key pair without a passphrase.

You must be a cluster administrator to perform this task. To enable local administrator accounts to access an SVM using an SSH public key, use: security login create -vserver SVM_name -user-or-group-name user_or_group_name -application application. For example: security login create -vserver engData1 -user-or-group-name svmadmin1 -application ssh -authmethod.

To create a key pair using a third-party tool, generate a key pair with the tool of your choice and save the public key to a local file, for example ~/.ssh/my-key-pair.pub (Linux) or C:\keys\my-key-pair.pub (Windows). The file name extension for this file is not important.

To let the nodes log in to one another without a password, we use SSH keys. Setting this up can be a little laborious, but it only needs to be done once. On each node, run the following:

$ ssh-keygen -t rsa

This creates a unique digital ‘identity’ (a key pair) for the computer. You’ll be asked a few questions; just press RETURN for each one and do not create a passphrase when asked.

To create and use a helper pod, complete the following steps: run a Debian container image and attach a terminal session to it. Once the terminal session is connected to the container, install an SSH client using apt-get. Then open a new terminal.

To create an SSH connection authenticated with a private key file, you need to specify the Amazon EC2 key pair private key when you launch a cluster. If you launch a cluster from the console, the Amazon EC2 key pair private key is specified in the Security and Access section on the Create Cluster page.
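The per-node ssh-keygen step above can also be run without answering any prompts. The sketch below is one way to do that, assuming OpenSSH's ssh-keygen: -N "" supplies the empty passphrase and -f names the output file. The 4096-bit key size and the KEYDIR variable (a scratch directory by default, so an existing ~/.ssh/id_rsa is never touched) are our own choices, not part of the original instructions.

```shell
#!/bin/sh
# Non-interactive version of "ssh-keygen -t rsa" + pressing RETURN at every prompt.
# KEYDIR is our own variable: it defaults to a fresh scratch directory.
# Set KEYDIR="$HOME/.ssh" to write the real key (ssh-keygen will ask before
# overwriting an existing id_rsa there).
KEYDIR="${KEYDIR:-$(mktemp -d)}"
mkdir -p "$KEYDIR"
chmod 700 "$KEYDIR"

# -q: no randomart banner; -b 4096: key size (our choice); -N "": no passphrase.
if ssh-keygen -q -t rsa -b 4096 -N "" -f "$KEYDIR/id_rsa"; then
  echo "private key: $KEYDIR/id_rsa (keep this on the node)"
  echo "public key:  $KEYDIR/id_rsa.pub (share this with other nodes)"
fi
```

Run it once per node; only the .pub file ever needs to leave the machine.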

All the commands in this guide require both the Azure CLI and aks-engine. Follow the installation instructions to download aks-engine, or compile it from source, before continuing.

For Azure CLI installation instructions, see the latest release in the Azure CLI GitHub repository.

Overview

aks-engine reads a cluster definition which describes the size, shape, and configuration of your cluster. This guide takes the default configuration of one master and two Linux agents. If you would like to change the configuration, edit examples/kubernetes.json before continuing.

The aks-engine deploy command automates creation of a Service Principal, Resource Group and SSH key for your cluster. If operators need more control or are interested in the individual steps, see the "AKS Engine the Long Way" section below.

NOTE: AKS Engine creates a cluster; it doesn't create an Azure Container Service resource. So clusters that you create using the aks-engine command (or ARM templates generated by the aks-engine command) won't show up as AKS resources, for example when you run az acs list. Think of aks-engine as the, er, engine which AKS uses to create clusters: you can use the same engine yourself, but AKS won't know about the results.

After the cluster is deployed, the upgrade and scale commands can be used to make updates to your cluster.

Gather Information

- The subscription in which you would like to provision the cluster. This is a UUID which can be found with az account list -o table.

- Proper access rights within the subscription, especially the right to create and assign service principals to applications (see AKS Engine the Long Way, Step #2).

- A valid service principal with all the required create/manage permissions. Instructions to create a new service principal can be found here.

- A dnsPrefix which forms part of the hostname for your cluster (e.g. staging, prodwest, blueberry). The DNS prefix must be unique, so pick a random name.

- A location to provision the cluster, e.g. westus2.

Deploy

For this example, the subscription id is 51ac25de-afdg-9201-d923-8d8e8e8e8e8e, the DNS prefix is contoso-apple, and location is westus2.

Run aks-engine deploy with the appropriate arguments:
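The deploy invocation can be sketched as follows, using the subscription ID, DNS prefix, and location from the example above. The --subscription-id, --dns-prefix, --location, and --api-model flags are assumed from aks-engine's deploy command. Depending on your aks-engine version and how you authenticate, service principal flags may also be required, so check aks-engine deploy --help. The run helper and its DRY_RUN switch are our own convention: commands are printed unless DRY_RUN=0.

```shell
#!/bin/sh
# Our own convention: print commands instead of running them unless DRY_RUN=0.
run() { if [ "${DRY_RUN:-1}" = 0 ]; then "$@"; else printf '+ %s\n' "$*"; fi; }

# deploy_cluster SUBSCRIPTION_ID DNS_PREFIX LOCATION API_MODEL
deploy_cluster() {
  run aks-engine deploy \
    --subscription-id "$1" \
    --dns-prefix "$2" \
    --location "$3" \
    --api-model "$4"
}

# Values from the example in this guide.
deploy_cluster 51ac25de-afdg-9201-d923-8d8e8e8e8e8e contoso-apple westus2 \
  examples/kubernetes.json
```

Review the printed command, then run it again with DRY_RUN=0 to deploy for real.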

aks-engine will output Azure Resource Manager (ARM) templates, SSH keys, and a kubeconfig file in the _output/contoso-apple-59769a59 directory:


_output/contoso-apple-59769a59/azureuser_rsa
_output/contoso-apple-59769a59/kubeconfig/kubeconfig.westus2.json

aks-engine generates kubeconfig files for each possible region. Access the new cluster by using the kubeconfig generated for the cluster's location. This example used westus2, so the kubeconfig is _output/<clustername>/kubeconfig/kubeconfig.westus2.json:
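Here is a small sketch of selecting that kubeconfig. It assumes the _output/<clustername>/kubeconfig/kubeconfig.<location>.json layout described above, and the kubeconfig_for helper is hypothetical. Exporting KUBECONFIG makes kubectl use that file for the rest of the shell session.

```shell
#!/bin/sh
# kubeconfig_for OUTPUT_DIR LOCATION -> path of the per-region kubeconfig
# (hypothetical helper following the layout aks-engine writes under _output).
kubeconfig_for() {
  printf '%s/kubeconfig/kubeconfig.%s.json\n' "$1" "$2"
}

KUBECONFIG="$(kubeconfig_for _output/contoso-apple-59769a59 westus2)"
export KUBECONFIG
echo "KUBECONFIG=$KUBECONFIG"
# With kubectl installed, commands now target the new cluster, e.g.:
#   kubectl cluster-info
```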

Administrative note: By default, the directory where aks-engine stores cluster configuration (_output/contoso-apple above) won't be overwritten by subsequent attempts to deploy a cluster using the same --dns-prefix. To re-use the same resource group name repeatedly, include the --force-overwrite command line option with your aks-engine deploy command. Relatedly, if your workflow requires a common prefix across multiple cluster deployments, include the --auto-suffix option to append a randomly generated suffix to the dns-prefix to form the resource group name. The --auto-suffix option appends a compressed timestamp to ensure a unique cluster name (and thus ensures that each deployment's configuration artifacts are stored locally under a discrete _output/<resource-group-name>/ directory).

Note: If the cluster is using an existing VNET please see the Custom VNET feature documentation for additional steps that must be completed after cluster provisioning.

The deploy command lets you override any values under the properties tag (even in arrays) from the cluster definition file without having to update the file. You can use the --set flag to do that. For example:
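The following is a sketch of deploy-time overrides. Each --set path is taken relative to properties in the cluster definition. masterProfile.dnsPrefix and agentPoolProfiles[0].count are assumed property paths based on the examples/kubernetes.json layout, so verify them against your own definition. Commands are printed unless DRY_RUN=0 (our own convention).

```shell
#!/bin/sh
# Our own convention: print commands instead of running them unless DRY_RUN=0.
run() { if [ "${DRY_RUN:-1}" = 0 ]; then "$@"; else printf '+ %s\n' "$*"; fi; }

deploy_with_overrides() {
  # Each --set path is relative to "properties" in the cluster definition.
  # The array element is quoted so the shell does not treat [0] as a glob.
  run aks-engine deploy \
    --subscription-id 51ac25de-afdg-9201-d923-8d8e8e8e8e8e \
    --location westus2 \
    --api-model examples/kubernetes.json \
    --set masterProfile.dnsPrefix=contoso-apple \
    --set 'agentPoolProfiles[0].count=3'
}

deploy_with_overrides
```

The file on disk stays unchanged; only this deployment sees the overridden values.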

AKS Engine the Long Way

Step 1: Generate an SSH Key

In addition to using Kubernetes APIs to interact with the clusters, cluster operators may access the master and agent machines using SSH.

If you don't have an SSH key, cluster operators may generate a new one.
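If you are not sure whether a key already exists, a guard like the one below avoids overwriting it. This is a sketch that assumes OpenSSH's ssh-keygen. The ensure_key function name and the 4096-bit RSA choice are ours. An RSA key matches the 'ssh-rsa ...' keyData format shown in Step 3.

```shell
#!/bin/sh
# ensure_key PATH: reuse the private key at PATH if it exists; otherwise
# create a new RSA key pair there with an empty passphrase (-N "").
ensure_key() {
  key="$1"
  dir="$(dirname "$key")"
  if [ -f "$key" ]; then
    echo "reusing existing key: $key"
    return 0
  fi
  # Create the directory only if missing, with owner-only permissions.
  [ -d "$dir" ] || mkdir -p -m 700 "$dir"
  ssh-keygen -q -t rsa -b 4096 -N "" -f "$key" || return 1
  echo "generated new key pair: $key and $key.pub"
}

# Usage (the path is an assumption; any location works):
#   ensure_key "$HOME/.ssh/id_rsa"
```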

Step 2: Create a Service Principal

Kubernetes clusters have integrated support for various cloud providers as core functionality. On Azure, aks-engine uses a Service Principal to interact with Azure Resource Manager (ARM). Follow the instructions to create a new service principal and grant it the necessary IAM role to create Azure resources.

Step 3: Edit your Cluster Definition

AKS Engine consumes a cluster definition which outlines the desired shape, size, and configuration of Kubernetes. There are a number of features that can be enabled through the cluster definition: check the examples directory for a number of... examples.

Edit the simple Kubernetes cluster definition and fill out the required values:

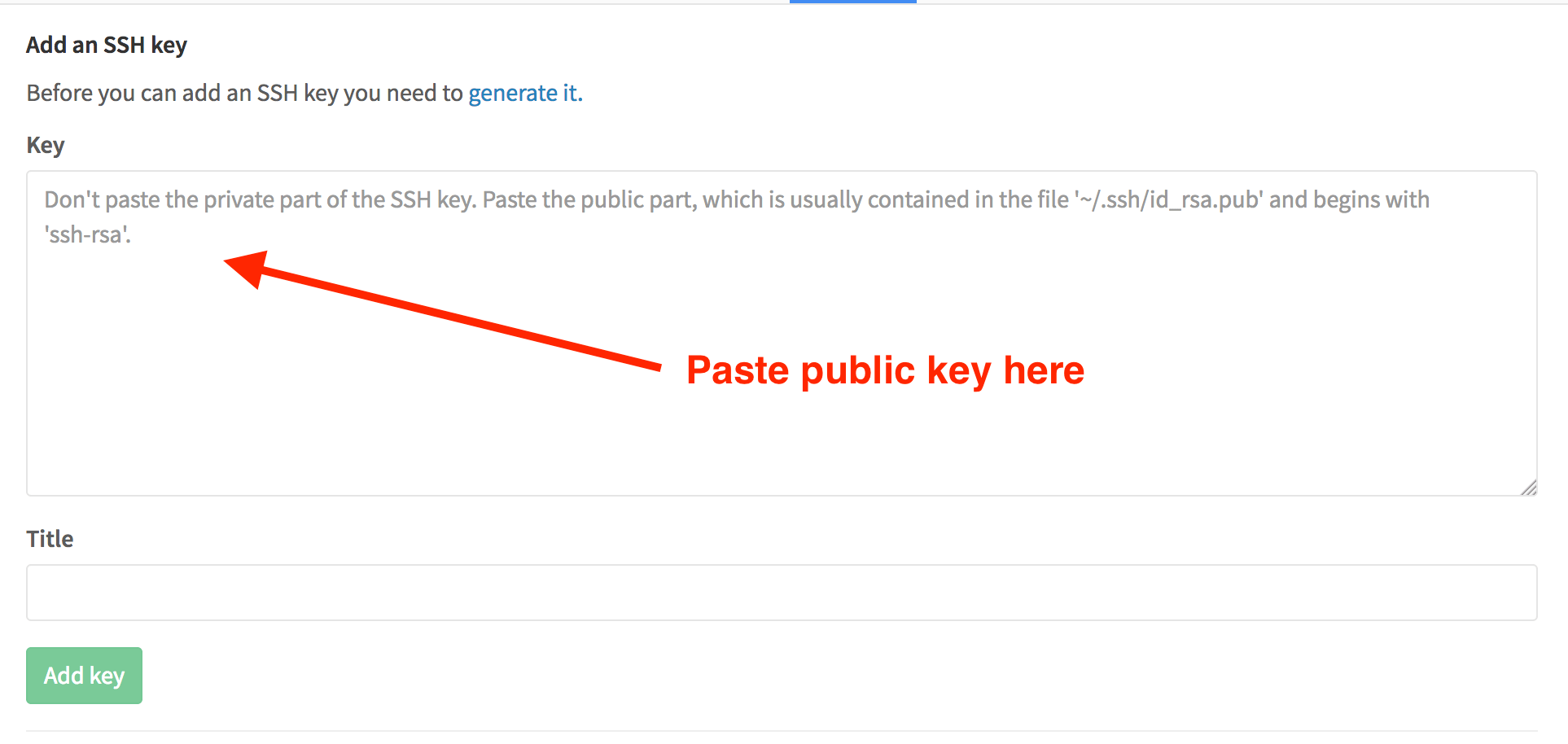

- dnsPrefix: must be a region-unique name and will form part of the hostname (e.g. myprod1, staging, leapingllama); be unique!

- keyData: must contain the public portion of an SSH key; this will be associated with the adminUsername value found in the same section of the cluster definition (e.g. 'ssh-rsa AAAAB3NzaC1yc2EAAAADAQABA....')

- clientId: this is the service principal's appId UUID or name from step 2

- secret: this is the service principal's password or randomly-generated password from step 2
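To fill in keyData, paste the single line from your .pub file, not the private key. The sketch below prints a linuxProfile fragment with adminUsername and keyData nested as linuxProfile.ssh.publicKeys[0].keyData, which is our reading of the examples/kubernetes.json layout; confirm it against your copy. The key line is inserted into JSON without escaping, so this assumes the key comment contains no double quotes. The linux_profile_json name is hypothetical.

```shell
#!/bin/sh
# linux_profile_json ADMIN_USER PUB_FILE: print a linuxProfile fragment whose
# keyData is the first line of PUB_FILE (hypothetical helper).
linux_profile_json() {
  admin_user="$1"
  pub_file="$2"
  key_data="$(head -n 1 "$pub_file")"
  case "$key_data" in
    -----BEGIN*)
      echo "error: $pub_file looks like a private key; keyData needs the .pub contents" >&2
      return 1 ;;
    "")
      echo "error: $pub_file is empty" >&2
      return 1 ;;
  esac
  cat <<EOF
"linuxProfile": {
  "adminUsername": "$admin_user",
  "ssh": {
    "publicKeys": [
      { "keyData": "$key_data" }
    ]
  }
}
EOF
}

# Usage: linux_profile_json azureuser ~/.ssh/id_rsa.pub
```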

Optional: attach to an existing virtual network (VNET). Details here

Note: you can then use the --set option of the generate command to override values from the cluster definition file directly on the command line (cf. Step 4).

Step 4: Generate the Templates

The generate command takes a cluster definition and outputs a number of templates which describe your Kubernetes cluster. By default, generate will create a new directory named after your cluster nested in the _output directory. If my dnsPrefix were larry, my cluster templates would be found in _output/larry-.

Run aks-engine generate examples/kubernetes.json

The generate command lets you override values from the cluster definition file without having to update the file. You can use the --set flag to do that:

The --set flag only supports JSON properties under properties. You can also work with arrays, as in the following:
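This sketch shows both forms. It assumes aks-engine generate accepts comma-separated path=value pairs in a single --set. The property paths and values are illustrative, and agentPoolProfiles[0] refers to the first agent pool in your definition. Commands are printed unless DRY_RUN=0 (our own convention).

```shell
#!/bin/sh
# Our own convention: print commands instead of running them unless DRY_RUN=0.
run() { if [ "${DRY_RUN:-1}" = 0 ]; then "$@"; else printf '+ %s\n' "$*"; fi; }

generate_with_overrides() {
  # Scalar values: comma-separated path=value pairs under "properties".
  run aks-engine generate \
    --set linuxProfile.adminUsername=myNewUsername,masterProfile.count=3 \
    examples/kubernetes.json
  # Array elements: address them by index; quoted to avoid shell globbing.
  run aks-engine generate \
    --set 'agentPoolProfiles[0].count=5,agentPoolProfiles[0].name=myPoolName' \
    examples/kubernetes.json
}

generate_with_overrides
```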

Step 5: Submit your Templates to Azure Resource Manager (ARM)

- To enable the optional network policy enforcement using calico, you have to set the parameter during this step according to this guide

- To enable the optional network policy enforcement using cilium, you have to set the parameter during this step according to this guide

- To enable the optional network policy enforcement using antrea, you have to set the parameter during this step according to this guide
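You can submit the generated templates with the Azure CLI: first create a resource group, then deploy azuredeploy.json with azuredeploy.parameters.json from the generate output directory. Those file names are what aks-engine generate typically writes. The resource group name, deployment name, and output directory below are hypothetical. Commands are printed unless DRY_RUN=0 (our own convention).

```shell
#!/bin/sh
# Our own convention: print commands instead of running them unless DRY_RUN=0.
run() { if [ "${DRY_RUN:-1}" = 0 ]; then "$@"; else printf '+ %s\n' "$*"; fi; }

# submit_templates RESOURCE_GROUP LOCATION OUTPUT_DIR
# OUTPUT_DIR is the directory "aks-engine generate" wrote for your cluster.
submit_templates() {
  run az group create --name "$1" --location "$2"
  run az deployment group create \
    --name "$1-deployment" \
    --resource-group "$1" \
    --template-file "$3/azuredeploy.json" \
    --parameters "@$3/azuredeploy.parameters.json"
}

# Hypothetical names; substitute your own resource group and output directory.
submit_templates my-cluster-rg westus2 _output/my-cluster
```

Any network policy parameter from the guides above would be set in the parameters file (or cluster definition) before this step.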

Note: If the cluster is using an existing VNET please see the Custom VNET feature documentation for additional steps that must be completed after cluster provisioning.

Checking VM tags

First, get the list of master and agent VMs in the cluster.

Once we have the VM names, we can check the tags associated with any of the VMs.
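Both lookups can be done with the Azure CLI. Running az vm list with a JMESPath --query returns only the VM names, and az vm show --query tags returns the tags for one VM. The resource group and VM name below are hypothetical placeholders. Commands are printed unless DRY_RUN=0 (our own convention).

```shell
#!/bin/sh
# Our own convention: print commands instead of running them unless DRY_RUN=0.
run() { if [ "${DRY_RUN:-1}" = 0 ]; then "$@"; else printf '+ %s\n' "$*"; fi; }

# list_vms RESOURCE_GROUP: names of all VMs (masters and agents) in the group.
list_vms() {
  run az vm list --resource-group "$1" --query "[].name" --output tsv
}

# show_vm_tags RESOURCE_GROUP VM_NAME: the tags attached to one VM.
show_vm_tags() {
  run az vm show --resource-group "$1" --name "$2" --query tags
}

# Hypothetical resource group and VM name.
list_vms my-cluster-rg
show_vm_tags my-cluster-rg my-master-vm-0
```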

So you have spent your time in pseudo mode and you have finally started moving to your own cluster? Perhaps you just jumped right into the cluster setup? In any case, a distributed Hadoop cluster setup requires your “master” node [name node & job tracker] to be able to SSH (without requiring a password, so key based authentication) to all other “slave” nodes (e.g. data nodes).

Key-based SSH authentication is required so that the master node can log in to the slave nodes (and the secondary name node) to start and stop them. It must also be set up for the secondary name node (which is listed in your masters file) so that, presuming it runs on another machine (a VERY good idea for a production cluster), it will be started from your name node with ./start-dfs.sh and from your job tracker node with ./start-mapred.sh.

Make sure you are the hadoop user for all of these commands. If you have not yet installed Hadoop and/or created the hadoop user, you should do that first. Depending on your distribution (please follow its directions for setup), this will be slightly different (e.g. Cloudera creates the hadoop user for you when going through the rpm install).

First, from your “master” node, check that you can ssh to localhost without a passphrase:

$ ssh localhost
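To run this check from a script instead of watching for a prompt, set ssh's BatchMode=yes option so that it fails rather than asking for a password. The sketch below does this, and the function name is ours. BatchMode also refuses to prompt you to accept an unknown host key, so connect interactively once per host first.

```shell
#!/bin/sh
# Succeeds only if key-based login works. BatchMode=yes makes ssh fail instead
# of prompting for a password; ConnectTimeout bounds the wait on dead hosts.
can_ssh_without_password() {
  ssh -o BatchMode=yes -o ConnectTimeout=5 "$1" true >/dev/null 2>&1
}

if can_ssh_without_password localhost; then
  echo "localhost: passwordless SSH works"
else
  echo "localhost: passwordless SSH is not set up yet"
fi
```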

If you cannot ssh to localhost without a passphrase, execute the following commands:

$ ssh-keygen -t rsa -P "" -f ~/.ssh/id_rsa

$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

On your master node, try again (as the hadoop user) to ssh to localhost. If you are still getting a password prompt, run:

$ chmod go-w $HOME $HOME/.ssh

$ chmod 600 $HOME/.ssh/authorized_keys

$ chown `whoami` $HOME/.ssh/authorized_keys

Now copy your public key to all of your “slave” machines, however you prefer to do this (don’t forget your secondary name node). Depending on whether these are new machines, the slave’s hadoop user may not have a .ssh directory yet; if not, create it ($ mkdir ~/.ssh).

$ scp ~/.ssh/id_rsa.pub slave1:~/.ssh/master.pub

Now log in (as the hadoop user) to your slave machine. While on your slave machine, add your master machine’s hadoop user’s public key to the authorized_keys file of the slave machine’s hadoop user:

$ cat ~/.ssh/master.pub >> ~/.ssh/authorized_keys
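Re-running the cat >> step above appends the same key a second time. The sketch below is an idempotent version that adds the key line only if it is not already present and keeps the file at mode 600. The add_authorized_key name is ours.

```shell
#!/bin/sh
# add_authorized_key PUB_FILE [AUTH_FILE]: append the key in PUB_FILE to
# AUTH_FILE (default ~/.ssh/authorized_keys) unless that exact line is present.
add_authorized_key() {
  pub_file="$1"
  auth_file="${2:-$HOME/.ssh/authorized_keys}"
  dir="$(dirname "$auth_file")"
  [ -d "$dir" ] || mkdir -p -m 700 "$dir"
  touch "$auth_file"
  chmod 600 "$auth_file"

  key="$(head -n 1 "$pub_file")"
  [ -n "$key" ] || { echo "error: $pub_file is empty" >&2; return 1; }
  if grep -qxF -- "$key" "$auth_file"; then
    echo "already authorized: $pub_file"
    return 0
  fi
  # If the last line has no trailing newline, add one so keys don't merge.
  if [ -s "$auth_file" ] && [ -n "$(tail -c 1 "$auth_file")" ]; then
    echo >> "$auth_file"
  fi
  printf '%s\n' "$key" >> "$auth_file"
  echo "added: $pub_file"
}

# Usage on the slave, after the scp step:
#   add_authorized_key ~/.ssh/master.pub
```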

Now, from the master node, try to ssh to the slave.

$ ssh slave1

If you are still prompted for a password (which is most likely), it is very often just a simple permission issue. Go back to your slave node and, as the hadoop user, run the following:


$ chmod go-w $HOME $HOME/.ssh

$ chmod 600 $HOME/.ssh/authorized_keys

$ chown `whoami` $HOME/.ssh/authorized_keys
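Those chmod/chown commands address OpenSSH's StrictModes check. Under that check, sshd refuses to use authorized_keys when the home directory, ~/.ssh, or the file itself is writable by group or others, or is owned by another user. The diagnostic sketch below reports which of those paths is causing the problem. The function name is ours.

```shell
#!/bin/sh
# check_ssh_perms [HOME_DIR]: report paths that commonly make sshd ignore
# authorized_keys. Returns non-zero if any problem is found.
check_ssh_perms() {
  home="${1:-$HOME}"
  me="$(id -un)"
  status=0
  for path in "$home" "$home/.ssh" "$home/.ssh/authorized_keys"; do
    if [ ! -e "$path" ]; then
      echo "missing: $path"
      status=1
    # -perm -020 = group-writable, -perm -002 = world-writable.
    elif [ -n "$(find "$path" -prune \( -perm -020 -o -perm -002 \))" ]; then
      echo "group/world-writable: $path (fix with chmod go-w)"
      status=1
    elif [ -n "$(find "$path" -prune ! -user "$me")" ]; then
      echo "not owned by $me: $path (fix with chown)"
      status=1
    fi
  done
  return "$status"
}

check_ssh_perms || echo "fix the paths listed above, then retry ssh"
```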

Try again from your master node.


$ ssh slave1


And you should be good to go. Repeat for all Hadoop Cluster Nodes.
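With many nodes, you can script the copy step. The sketch below reads one hostname per line from a file such as Hadoop's slaves file. Run it for the masters file too, so the secondary name node is covered. For each host it calls OpenSSH's ssh-copy-id, which appends the key to that host's authorized_keys and prompts for the password once. The file path and function name are assumptions. Commands are printed unless DRY_RUN=0 (our own convention).

```shell
#!/bin/sh
# Our own convention: print commands instead of running them unless DRY_RUN=0.
run() { if [ "${DRY_RUN:-1}" = 0 ]; then "$@"; else printf '+ %s\n' "$*"; fi; }

# distribute_key HOSTS_FILE [PUB_KEY]: copy PUB_KEY to every listed host.
distribute_key() {
  hosts_file="$1"
  pub_key="${2:-$HOME/.ssh/id_rsa.pub}"
  # Read hosts on file descriptor 3 so ssh cannot consume the rest of the
  # host list from stdin; "|| [ -n ... ]" handles a final line with no newline.
  while read -r host <&3 || [ -n "$host" ]; do
    case "$host" in
      ''|'#'*) continue ;;   # skip blank lines and comments
    esac
    run ssh-copy-id -i "$pub_key" "$host"
  done 3< "$hosts_file"
}

# Usage (hypothetical path; use your distribution's slaves/masters files):
#   DRY_RUN=0 distribute_key "$HADOOP_HOME/conf/slaves"
```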


/*

Joe Stein

http://www.linkedin.com/in/charmalloc

*/